Mirror of https://github.com/TencentARC/GFPGAN.git (synced 2026-02-14 21:34:32 +00:00)

Are the decoders finetuned? #153


Originally created by @mchong6 on GitHub (Jan 24, 2022).

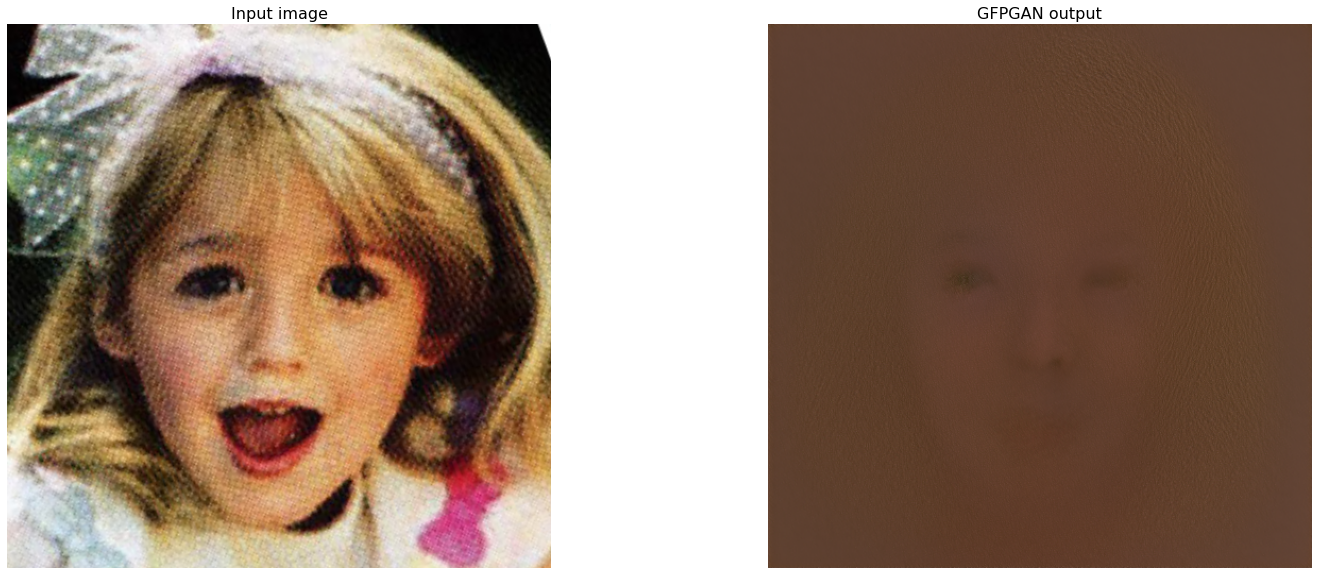

From the training script, I don't believe the decoders are being fine-tuned, but when I experiment with the Colab code I get strange results.

In the Colab code, if I pass an empty `conditions` list to the decoder, it should return results without SFT (Spatial Feature Transform) modulation. However, the results are bad:

```python
image, _ = self.stylegan_decoder(
    [style_code],
    [],  # conditions: empty list, so no SFT modulation is applied
    return_latents=return_latents,
    input_is_latent=True,
    randomize_noise=randomize_noise)
```
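As a toy illustration of why an empty `conditions` list should bypass SFT, the sketch below assumes a simplified decoder in which each layer's output may be followed by an element-wise SFT step `x * scale + shift`, with `conditions` laid out as alternating scale/shift entries. The helpers `sft` and `toy_decoder`, plain lists standing in for feature maps, and that layout are all hypothetical simplifications, not GFPGAN's actual `stylegan_decoder`.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Layer = Callable[[Vector], Vector]


def sft(feat: Sequence[float], scale: Sequence[float], shift: Sequence[float]) -> Vector:
    """Element-wise spatial feature transform: feat * scale + shift."""
    return [f * s + b for f, s, b in zip(feat, scale, shift)]


def toy_decoder(x: Vector, layers: Sequence[Layer], conditions: Sequence[Vector]) -> Vector:
    """Hypothetical decoder: run each layer, then apply SFT if a (scale, shift) pair exists.

    conditions is assumed to be [scale_0, shift_0, scale_1, shift_1, ...];
    layers without a matching pair (all of them when conditions == []) are left unmodulated.
    """
    for k, layer in enumerate(layers):
        x = layer(x)
        if 2 * k + 1 < len(conditions):
            x = sft(x, conditions[2 * k], conditions[2 * k + 1])
    return x


if __name__ == "__main__":
    layers = [lambda v: [2 * a for a in v], lambda v: [a + 1 for a in v]]
    print(toy_decoder([1.0, 2.0], layers, []))  # no SFT: [3.0, 5.0]
    print(toy_decoder([1.0, 2.0], layers, [[0.5, 0.5], [0.0, 0.0]]))  # SFT on layer 0 only: [2.0, 3.0]
```

With `conditions=[]`, `toy_decoder` reduces to plain composition of the layers, so in this simplified model the output depends only on the input latent and the decoder's own weights.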

The image above is the result of setting `conditions` to an empty list on the test images. If the decoder is not fine-tuned, this should produce proper faces.
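One way to check the question directly is to compare the decoder weights stored in a GFPGAN checkpoint against a reference StyleGAN2 checkpoint. The sketch below uses plain Python lists in place of tensors; in practice the dictionaries would come from `torch.load`. The `stylegan_decoder.` key prefix and the assumption that parameter names match between the two checkpoints after stripping it are unverified guesses about the checkpoint layout, and `strip_prefix`, `param_diffs`, and `changed_params` are hypothetical helpers.

```python
import math
from typing import Dict, List, Sequence

Params = Dict[str, Sequence[float]]


def strip_prefix(params: Params, prefix: str) -> Params:
    """Keep only keys under `prefix` (e.g. a submodule name) and drop the prefix."""
    return {k[len(prefix):]: v for k, v in params.items() if k.startswith(prefix)}


def param_diffs(reference: Params, candidate: Params) -> Dict[str, float]:
    """Max absolute difference per parameter name present in both dicts."""
    diffs: Dict[str, float] = {}
    for name in sorted(reference.keys() & candidate.keys()):
        ref, cand = reference[name], candidate[name]
        if len(ref) != len(cand):
            diffs[name] = math.inf  # size mismatch: treat as changed
        else:
            diffs[name] = max((abs(r - c) for r, c in zip(ref, cand)), default=0.0)
    return diffs


def changed_params(reference: Params, candidate: Params, tol: float = 1e-6) -> List[str]:
    """Names whose values differ by more than `tol` (i.e. likely fine-tuned)."""
    return [name for name, d in param_diffs(reference, candidate).items() if d > tol]


if __name__ == "__main__":
    # Toy stand-ins with hypothetical key names; real dicts would be loaded with torch.load(...)
    stylegan2 = {"conv1.weight": [0.1, 0.2], "to_rgb.bias": [0.0]}
    gfpgan = {
        "stylegan_decoder.conv1.weight": [0.1, 0.25],
        "stylegan_decoder.to_rgb.bias": [0.0],
        "encoder_layer.weight": [1.0],
    }
    decoder = strip_prefix(gfpgan, "stylegan_decoder.")
    print(changed_params(stylegan2, decoder))  # ['conv1.weight']
```

An empty list from `changed_params` would suggest the decoder weights were left unchanged, while a non-empty list points to layers that differ, provided the key names really do line up between the two checkpoints.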

@laodar commented on GitHub (Jul 31, 2023):

@mchong6 Have you solved this problem?